GIST College students published international papers on research affecting everyday life

- 엘리스 리

- Date: 2015.09.16

Drones, intelligent CCTV, patient-behavior detection cameras...

(From left) GIST College students Seung Chae, Insu Kim, and Yuri Ku, and Liberal Arts and Sciences Professor Kin Choong Yow

The results of research by three GIST College students, conducted under the supervision and guidance of Professor Kin Choong Yow, have recently been published internationally.

Insu Kim, a senior EECS major, published his research on drones, and Yuri Ku, also a senior EECS major, published her research on intelligent CCTV. Both papers were published at the Ninth International Conference on Mobile Ubiquitous Computing, Systems, Services and Technologies, held in Nice, France, from July 19 to 24, 2015.

Seung Chae, a junior EECS major, published his research on an algorithm that uses CCTV cameras to detect abnormal behavior in hospital patients, which could signal to medical personnel that a patient may need immediate medical attention. His paper was published at the Fifth International Conference on Ambient Computing, Applications, Services and Technologies, which was also held in Nice, France, from July 19 to 24, 2015.

The students conducted their research over the past year under the supervision of Professor Yow, who is from Singapore. Professor Yow received his Ph.D. from the University of Cambridge in England and taught at Nanyang Technological University in Singapore and at the Shenzhen Institutes of Advanced Technology in China before joining GIST College in 2013.

It is rare for undergraduate students to present their research at international conferences, and the GIST students drew considerable interest from foreign researchers.

Insu Kim presented an algorithm that accurately calculates the distance between a drone and a person from images taken by the drone as it moves along a consistent flight path. Conventional distance-calculation algorithms usually require images from two or more cameras, and their calculation errors grow as the cameras are placed closer together, so the cameras must be spaced farther apart to improve accuracy.
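The trade-off described above follows from the textbook stereo relation: a point at distance Z seen by two parallel cameras separated by a baseline B appears shifted by a disparity d = fB/Z pixels, so a one-pixel disparity error costs roughly Z²/(fB) metres of depth. The Python sketch below illustrates that relation under stated assumptions (ideal pinhole cameras with parallel optical axes); the focal length and distances are illustrative values, not figures from Kim's paper, and the function names are hypothetical.

```python
# Minimal sketch of the standard stereo depth relation (not Kim's algorithm).
# Assumption: ideal pinhole cameras with parallel optical axes.

def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Distance Z (metres) to a point seen by two cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_error(focal_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 1.0) -> float:
    """First-order depth uncertainty: dZ ~ Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    f = 800.0  # hypothetical focal length in pixels
    z = 5.0    # hypothetical person standing 5 m away
    for b in (0.05, 0.2, 1.0):
        err = depth_error(f, b, z)
        print(f"baseline {b:4.2f} m -> +/- {err:.3f} m per pixel of disparity error")
```

With these hypothetical numbers the depth uncertainty at 5 m drops from about 0.63 m at a 5 cm baseline to about 0.03 m at a 1 m baseline, which is why widely spaced cameras are more accurate. A single camera on a moving drone can obtain a long baseline simply by flying between shots.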

Insu Kim solved this problem by using a single camera attached to a drone. He programmed the drone to hover one meter above the ground and capture images of a person while the drone moved horizontally.

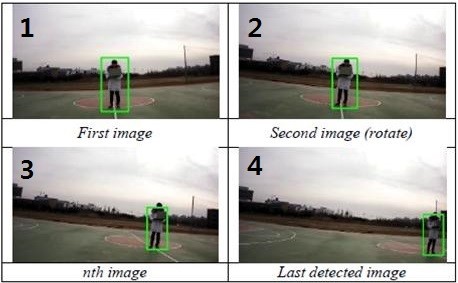

Figure 1. Drone captures pictures as it moves horizontally.

From captured images 1, 2, and 4, the algorithm determines the person's pixel position, the corresponding viewing angles, and the distance flown by the drone, and then accurately calculates the person's distance from the drone.
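One way to turn pixel positions, angles, and flown distance into a distance is to triangulate from several points along the flight line. The sketch below is a generic motion-stereo formulation, not the exact algorithm in the paper. It assumes a pinhole camera pointing perpendicular to a straight horizontal flight path, a known focal length in pixels, and drone positions known from the distance flown. Each image then gives a bearing tan(theta_i) = (u_i - c_x)/f = (X - s_i)/Z, which is linear in the person's position (X, Z), so two or more images can be combined by least squares. The function and parameter names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def triangulate_from_flight(positions_m: Sequence[float], pixel_u: Sequence[float],
                            focal_px: float, cx_px: float) -> Tuple[float, float]:
    """Least-squares estimate of a target's (X, Z) from >= 2 views along a line.

    Assumptions (hypothetical setup): the camera looks along +Z, perpendicular to
    the flight path along X; positions_m[i] is the drone's X when image i was taken;
    pixel_u[i] is the target's horizontal pixel coordinate in image i.
    Each view gives X - t_i * Z = s_i with t_i = (u_i - cx) / f.
    """
    if len(positions_m) != len(pixel_u) or len(positions_m) < 2:
        raise ValueError("need at least two matching (position, pixel) pairs")
    t = [(u - cx_px) / focal_px for u in pixel_u]
    n = len(t)
    sum_t = sum(t)
    sum_tt = sum(ti * ti for ti in t)
    sum_s = sum(positions_m)
    sum_ts = sum(ti * si for ti, si in zip(t, positions_m))
    # Normal equations for rows [1, -t_i] and right-hand side s_i.
    det = n * sum_tt - sum_t * sum_t
    if abs(det) < 1e-12:
        raise ValueError("degenerate views: the bearing did not change")
    x = (sum_tt * sum_s - sum_t * sum_ts) / det
    z = (sum_t * sum_s - n * sum_ts) / det
    return x, z


if __name__ == "__main__":
    # Hypothetical flight: images taken at 0, 0.5 and 1.5 m along the path.
    x, z = triangulate_from_flight([0.0, 0.5, 1.5], [520.0, 420.0, 220.0],
                                   focal_px=800.0, cx_px=320.0)
    dist = math.hypot(x - 1.5, z)  # distance from the drone's last position
    print(f"target at X={x:.2f} m, Z={z:.2f} m, {dist:.2f} m from the drone")
```

For these hypothetical inputs the fit recovers X = 1 m and Z = 4 m. Using more than two images lets the least-squares step average out pixel noise.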

Unlike typical algorithms that require multiple cameras, Kim's method uses only one low-cost drone with a single camera attached, which keeps the system simple. The algorithm can also be applied to vehicles, animals, and various other objects.

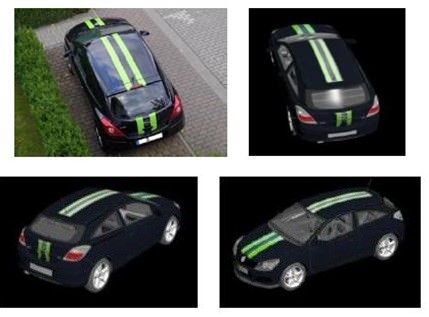

Yuri Ku presented her research on an intelligent CCTV algorithm that predicts how a targeted vehicle would look when captured by another CCTV camera. Suppose a car being tracked has disappeared off screen after being captured on CCTV. Key features such as stickers, patterns, color, design, and model are extracted from the captured images and then applied as a UVW texture map to a 3D model of the same or a similar vehicle. The algorithm can then rotate the vehicle's 3D model to predict how the car would look from different angles.

Figure 2. (Top left) Actual image captured by CCTV. (Top right) Car modeled in 3D by the algorithm. (Bottom two) Predicted views of the car from different CCTV angles.
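The rotate-and-view step can be illustrated with basic 3D geometry: rotate a model's vertices about the vertical axis, then project them through a pinhole camera. The sketch below uses a plain box as a stand-in for the textured car model and omits the UVW texture mapping entirely; the box dimensions, focal length, camera distance, and function names are assumptions chosen for illustration.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def box_vertices(length: float, width: float, height: float) -> List[Point3]:
    """Eight corners of a box (x: length, y: up, z: width), centred on the y axis."""
    return [(sx * length / 2, y, sz * width / 2)
            for sx in (-1, 1) for y in (0.0, height) for sz in (-1, 1)]


def rotate_yaw(points: List[Point3], yaw_deg: float) -> List[Point3]:
    """Rotate points about the vertical (y) axis by yaw_deg degrees."""
    a = math.radians(yaw_deg)
    c, s = math.cos(a), math.sin(a)
    return [(c * x + s * z, y, -s * x + c * z) for x, y, z in points]


def project(points: List[Point3], focal_px: float, cx: float, cy: float,
            cam_dist_m: float) -> List[Point2]:
    """Pinhole projection: camera at the origin looking along +z, model cam_dist_m ahead."""
    out = []
    for x, y, z in points:
        depth = z + cam_dist_m
        out.append((cx + focal_px * x / depth, cy - focal_px * y / depth))
    return out


def projected_width(points2d: List[Point2]) -> float:
    """Horizontal extent of the projected silhouette, in pixels."""
    us = [u for u, _ in points2d]
    return max(us) - min(us)


if __name__ == "__main__":
    car = box_vertices(4.5, 1.8, 1.5)  # hypothetical car-sized box, metres
    for yaw in (0, 45, 90):
        view = project(rotate_yaw(car, yaw), 800.0, 320.0, 240.0, 20.0)
        print(f"yaw {yaw:3d} deg -> silhouette width {projected_width(view):.1f} px")
```

Comparing the silhouette width at 0 and 90 degrees shows how strongly a vehicle's apparent shape depends on the viewing angle, which is the motivation for predicting a separate view for each camera.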

With this algorithm, one can predict the appearance of a vehicle at another CCTV location and then quickly match the predicted image with the targeted vehicle as soon as it enters that camera's field of view.
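The article does not say how the predicted image is compared with live footage. Purely as a hedged illustration, the sketch below substitutes a simple, widely used technique, color-histogram intersection, to score how well each candidate vehicle crop matches the predicted appearance. The pixel format (lists of RGB tuples), the bin count, and the function names are assumptions, not details of Ku's method.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

RGB = Tuple[int, int, int]
Histogram = Dict[Tuple[int, int, int], float]


def color_histogram(pixels: Sequence[RGB], bins_per_channel: int = 4) -> Histogram:
    """Normalised 3D color histogram; each channel is quantised into equal-width bins."""
    if not pixels:
        raise ValueError("empty image")
    step = 256 // bins_per_channel
    counts = Counter((r // step, g // step, b // step) for r, g, b in pixels)
    total = len(pixels)
    return {k: v / total for k, v in counts.items()}


def histogram_intersection(h1: Histogram, h2: Histogram) -> float:
    """Similarity in [0, 1]; 1.0 means identical color distributions."""
    return sum(min(p, h2.get(k, 0.0)) for k, p in h1.items())


def best_match(predicted: Sequence[RGB],
               candidates: List[Sequence[RGB]]) -> Tuple[int, float]:
    """Index and score of the candidate crop whose colors best match the prediction."""
    if not candidates:
        raise ValueError("no candidates")
    hp = color_histogram(predicted)
    scores = [histogram_intersection(hp, color_histogram(c)) for c in candidates]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


if __name__ == "__main__":
    # Hypothetical crops: a predicted red car, then a blue car and a red car on camera.
    predicted = [(200, 30, 30)] * 90 + [(20, 20, 20)] * 10
    blue_car = [(30, 30, 200)] * 90 + [(20, 20, 20)] * 10
    red_car = [(210, 25, 35)] * 85 + [(15, 15, 15)] * 15
    idx, score = best_match(predicted, [blue_car, red_car])
    print(f"best candidate: {idx} (score {score:.2f})")
```

A real system would combine color with shape and texture cues predicted from the 3D model; color alone is only the simplest stand-in.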

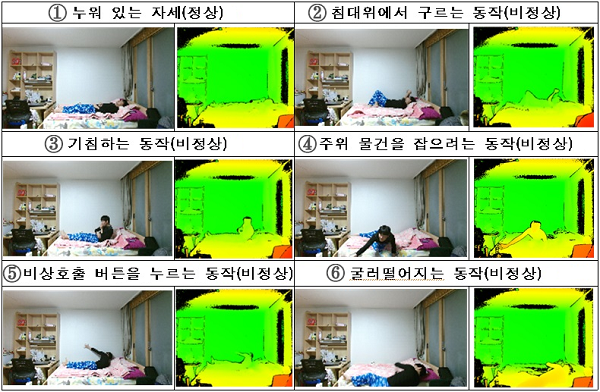

Seung Chae proposed an algorithm that can help healthcare providers detect abnormal movements by hospital patients, especially elderly or disabled patients. The algorithm could be used in conjunction with healthcare services in an Ambient Assisted Living (AAL) environment.

Conventional movement-recognition technology is usually limited to image data obtained from RGB sensors, but Chae added the infrared sensor of Microsoft's Kinect gaming hardware to calculate a patient's distance and movements.

Figure 3. Baseline data recorded in a dorm room: ① lying down (normal), ② rolling (abnormal), ③ coughing (abnormal), ④ moving to grab something (abnormal), ⑤ pressing the call button (abnormal), ⑥ rolling on the floor (abnormal).
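A depth sensor such as the Kinect reports a distance for each pixel, which lets a pixel be converted into a 3D point so that movement can be measured in metres rather than pixels. The sketch below shows the standard pinhole back-projection; the intrinsic parameters (FX, FY, CX, CY) are placeholder values, not calibrated Kinect figures, and the speed function is a hypothetical example of a movement feature rather than Chae's method.

```python
import math
from typing import Tuple

Point3 = Tuple[float, float, float]

# Placeholder intrinsics (hypothetical, not calibrated Kinect values).
FX, FY, CX, CY = 580.0, 580.0, 320.0, 240.0


def back_project(u: float, v: float, depth_m: float,
                 fx: float = FX, fy: float = FY,
                 cx: float = CX, cy: float = CY) -> Point3:
    """Convert pixel (u, v) with measured depth into camera-frame coordinates (metres)."""
    return ((u - cx) * depth_m / fx, (v - cy) * depth_m / fy, depth_m)


def speed_m_per_s(p0: Point3, p1: Point3, dt_s: float) -> float:
    """Average speed of a tracked point between two frames dt_s seconds apart."""
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    return math.dist(p0, p1) / dt_s


if __name__ == "__main__":
    # Hypothetical tracked point (e.g. a hand) seen 2 m away in two frames 0.5 s apart.
    p0 = back_project(320, 240, 2.0)
    p1 = back_project(349, 240, 2.0)
    print(f"moved {math.dist(p0, p1):.2f} m at {speed_m_per_s(p0, p1, 0.5):.2f} m/s")
```

Working in metric 3D coordinates means the same movement produces the same measurement whether the patient is near to or far from the sensor, which a pixel-only RGB measurement cannot guarantee.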

Chae captured six images of himself portraying a patient in his dorm room to serve as his baseline data. He then extracted the different movements using Harris corner detection and, from these, developed an algorithm to detect the abnormal movements that a real patient in distress might make.
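Harris corner detection scores each pixel using the structure tensor M, built from nearby image gradients, with the response R = det(M) - k * trace(M)². R is large and positive at corners, negative along edges, and near zero in flat regions. The pure-Python sketch below computes R on a small grayscale grid using central-difference gradients, a 3×3 window, and the commonly used k = 0.04. In practice a library routine such as OpenCV's `cv2.cornerHarris` would be used. How Chae turned the detected corners into movement features is not described here, so the sketch stops at the response map.

```python
from typing import List

Image = List[List[float]]


def harris_response(img: Image, k: float = 0.04) -> Image:
    """Harris corner response R = det(M) - k * trace(M)**2 for each interior pixel.

    Gradients use central differences; M sums gradient products over a 3x3 window.
    Border pixels where the window does not fit are left at 0.0.
    """
    h, w = len(img), len(img[0])
    ix = [[0.0] * w for _ in range(h)]
    iy = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix[y][x] = (img[y][x + 1] - img[y][x - 1]) / 2.0
            iy[y][x] = (img[y + 1][x] - img[y - 1][x]) / 2.0
    r = [[0.0] * w for _ in range(h)]
    for y in range(2, h - 2):
        for x in range(2, w - 2):
            sxx = syy = sxy = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    gx, gy = ix[y + dy][x + dx], iy[y + dy][x + dx]
                    sxx += gx * gx
                    syy += gy * gy
                    sxy += gx * gy
            r[y][x] = (sxx * syy - sxy * sxy) - k * (sxx + syy) ** 2
    return r


if __name__ == "__main__":
    # Hypothetical 10x10 test image: a bright square in the lower-right corner.
    img = [[1.0 if x >= 4 and y >= 4 else 0.0 for x in range(10)] for y in range(10)]
    resp = harris_response(img)
    corners = [(y, x) for y in range(10) for x in range(10) if resp[y][x] > 0.5]
    print("strong corner responses at:", corners)
```

On this test image the response is positive at the square's corner, (4, 4), negative along its top edge, and zero in the flat background.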

Conventional detection algorithms usually rely on a sensor that must be physically attached to the patient, whereas Chae's algorithm detects a patient's movements without any such sensor.

Professor Yow said, “No matter how simple the research question may be, every new answer and discovery motivated my students to excel at their research, and that led to their good results. Here at GIST, we have an educational system that provides many opportunities to undergraduate students who have the curiosity and the enthusiasm to actively pursue their own research interests with the help of a faculty advisor.”